History of Security Incidents of Solana

A full stack of 13 key incidents that shaped Solana’s path from chaos to maturity

Hello!

In the realm of blockchain, speed and scalability are often achieved at the cost of security, and the stakes of vulnerabilities escalate as decentralized networks grow in complexity and value. For Solana, a high-throughput, low-latency blockchain, the journey to resilience has been paved with both innovation and hard lessons.

Since its mainnet launch in 2020, Solana has emerged as a major player in the layer 1 ecosystem, attracting millions of users, billions in assets, and thousands of developers. However, this rapid growth brought a series of security challenges: devastating application exploits, critical bugs in core protocol components, and sophisticated supply chain attacks on widely adopted libraries.

Each incident has tested not just the technical foundation of Solana, but the resilience and coordination of its community and leadership.

Security incidents like the ‘$320 million Wormhole hack’, vulnerabilities in wallet software like Slope, and the targeted compromise of @solana/web3.js showcase the diverse and evolving threat landscape faced by modern blockchain ecosystems. Yet each breach offered critical insights into system design, incident response, and community trust, shaping how Solana rebuilt itself over time.

This article presents a comprehensive, data-driven analysis of Solana’s security journey, categorizing major incidents, investigating root causes, evaluating repercussions, and tracing the evolution of response mechanisms.

I aim not only to document the vulnerabilities of Solana, but also to spotlight the network’s ongoing maturation towards a more secure and resilient future.

Let the investigation begin🔎

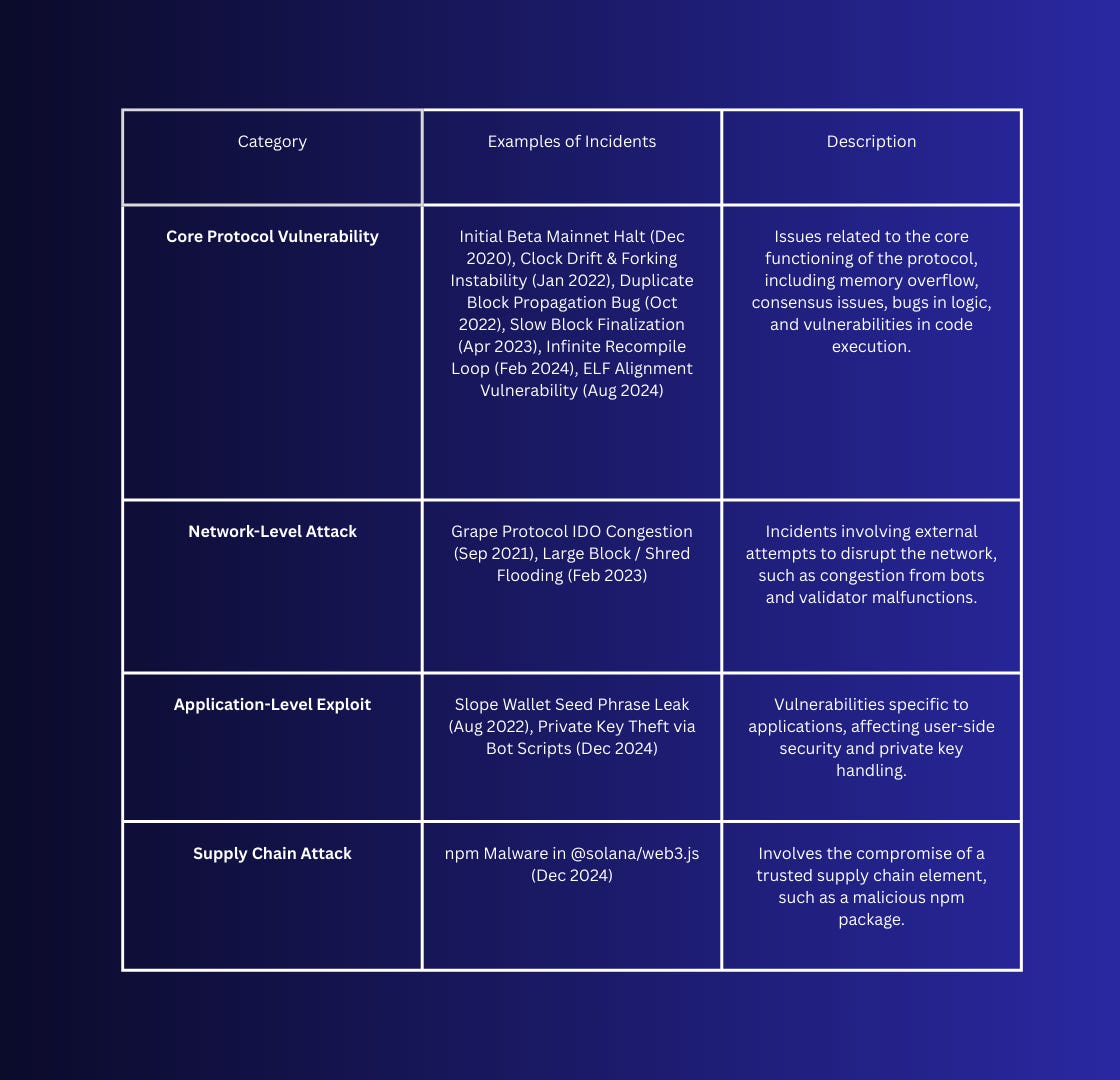

Classification of Security Incidents

Security challenges in Solana’s history have emerged from a wide range of vectors, each with distinct technical causes and consequences. To build a structured understanding, let’s classify these incidents into clear categories spanning application-level exploits, supply chain compromises, core protocol vulnerabilities, and network-level attacks.

Liveness vs Safety: Why Solana Chooses to Halt

In distributed systems, trade-offs are inevitable, and nowhere is this more evident than in blockchain networks.

According to the CAP theorem, distributed systems can only guarantee two of the following three properties: Consistency, Availability, and Partition Tolerance.

Blockchains, by nature, must tolerate network partitions. Dropped messages, latency spikes, and validator desynchronization are all part of reality. This forces Solana to choose between Consistency (every node agrees on the same state) and Availability (the system always responds to requests). Solana chose Consistency over Availability, making it a CP (Consistency + Partition Tolerance) system.

This approach has practical consequences. When Solana encounters a critical issue, whether a consensus bug, validator misbehavior, or a denial-of-service event, the network may come to a full stop. Transactions are paused, blocks are not produced, and user-facing applications appear frozen.

These events are classified as liveness failures, where the system isn’t progressing, but the ledger’s state remains intact and protected. Put simply, Solana prefers to shut down temporarily rather than operate incorrectly.

In a safety failure, by contrast, the system may keep operating but produce conflicting histories or enable double-spending. Solana prioritizes safety over liveness, preserving the chain’s correctness and user funds in situations where continued operation would pose unacceptable risk.
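To make the safety-over-liveness choice concrete, here is a minimal Python sketch of a safety-first finality rule. It assumes a heavily simplified model in which a block is finalized only when validators holding strictly more than two-thirds of total stake vote for it; the function names and stake figures are hypothetical, and Solana's real Tower BFT voting logic is far more involved.

```python
from fractions import Fraction

# Safety-first threshold (simplified): strictly more than 2/3 of total stake.
SUPERMAJORITY = Fraction(2, 3)

def can_finalize(votes_for_block: dict, total_stake: int) -> bool:
    """True only if the stake voting for this block exceeds 2/3 of all stake.

    `votes_for_block` maps a hypothetical validator id to its stake.
    """
    voted = sum(votes_for_block.values())
    return Fraction(voted, total_stake) > SUPERMAJORITY

def next_action(votes_for_block: dict, total_stake: int) -> str:
    # A CP system halts instead of guessing: no supermajority, no progress.
    return "finalize" if can_finalize(votes_for_block, total_stake) else "halt"

# Two partitions holding 40 and 35 of 100 total stake: neither can finalize,
# so the chain stops rather than committing to conflicting histories.
print(next_action({"a": 40}, 100))           # halt
print(next_action({"b": 35}, 100))           # halt
print(next_action({"a": 40, "b": 30}, 100))  # finalize
```

Halting here is the liveness failure described above; the alternative, letting each partition finalize on its own, is exactly the safety failure Solana avoids.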

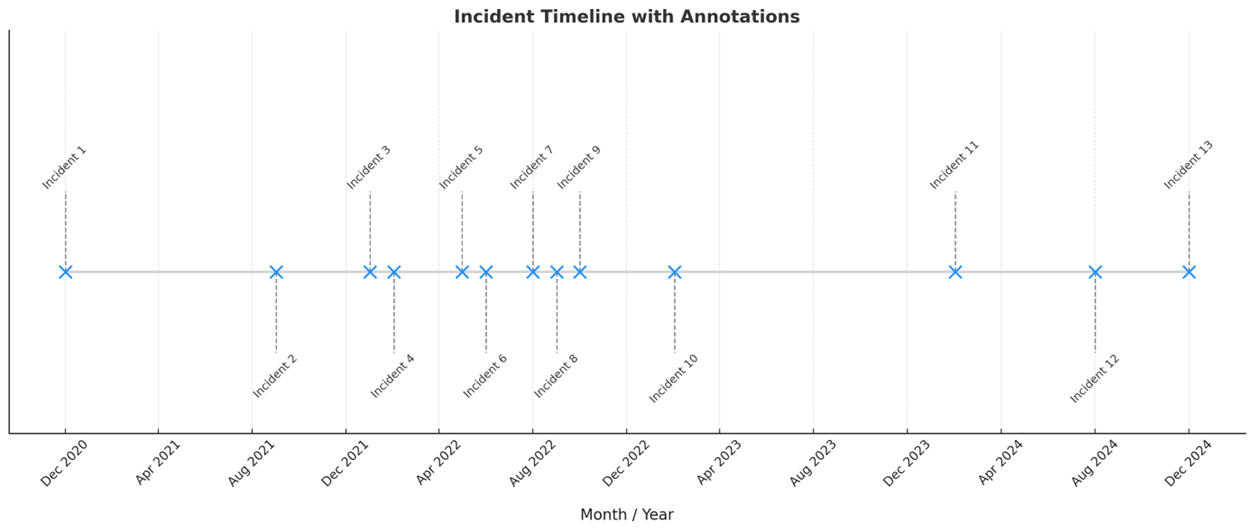

Let’s look at Solana’s security issues in chronological order.

Outage Instances

The following sections highlight key incidents, their root causes, and the network’s subsequent improvements, offering insights into how Solana has evolved to enhance stability and resilience over time. They cover not only network outages but also other security incidents in Solana’s history.

For non-technical folks, think of Solana as a high-performance supercar. We attempt to offer intuitive and insightful non-technical summaries using this metaphor at the beginning of each incident briefing. Check it out!

Back to the timeline: Solana launched on mainnet on March 16th, 2020. Let’s cover the incidents since then.

Incident #1: The Turbine Block Propagation Bug

Imagine Solana as a Ferrari speeding on a racetrack. Suddenly, the car’s GPS (used to track its laps) glitches and shows two different routes for the same lap. Half the crew thinks it’s on one path, the other half thinks it’s on another. No one agrees on what lap it’s on, so the car safely pulls over until everyone has their maps fixed.

In December 2020, Solana faced its first major network outage due to a flaw in its block propagation layer, known as Turbine. This incident resulted in roughly six hours of downtime, marking one of the earliest large-scale interruptions on the network.

Solana uses a multi-layer block propagation mechanism called Turbine to broadcast ledger entries to all nodes. Block propagation is the process of disseminating newly created blocks of transactions to all nodes in the network.

The root cause lay in how validators tracked and propagated blocks.

Specifically, a validator mistakenly produced and shared two different blocks for the same slot, which split the network into three partitions:

Partitions A and B each received a different version of the block

Partition C detected the inconsistency independently

Because none of these partitions held a supermajority of stake, consensus stalled. Validators used slot numbers (u64 integers) as identifiers for both blocks and state transitions, so each partition incorrectly assumed it held the same block as the others. This misinterpretation caused:

Nodes with block A to reject forks based on block B

Nodes with block B to reject forks based on block A

With divergent state transitions and no reliable block repair mechanism, finality became impossible.

Engineers implemented several key changes:

Tracking blocks by hash, not slot number, to uniquely identify diverging forks

Improved fault detection inside Turbine

Propagation of faults via the gossip network to ensure validators detect inconsistencies faster

The upgraded protocol can handle multiple blocks created for the same slot, allowing validators to repair and reconcile forks without falling into an unrecoverable state.

Although the bug triggered the halt, most of the delay came from restoring stake participation. Solana requires approximately 80% of active stake to resume block production, and coordinating validator restarts takes time.

This incident revealed weaknesses in early block propagation and state tracking, and it prompted Solana to handle fork resolution explicitly going forward.
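The difference between identifying blocks by slot and by hash can be shown with a toy block store. This is a minimal sketch, not Solana's actual blockstore: it assumes a block is just a (slot, payload) pair and uses SHA-256 over both as a stand-in for the real block hash. The class names are hypothetical.

```python
import hashlib

def block_hash(slot: int, payload: bytes) -> str:
    # Stand-in for a real block hash: commits to both the slot and the contents.
    return hashlib.sha256(slot.to_bytes(8, "little") + payload).hexdigest()

class SlotKeyedStore:
    """Pre-fix model: one entry per slot, so a second block silently overwrites."""

    def __init__(self):
        self.blocks = {}  # slot -> payload

    def insert(self, slot: int, payload: bytes) -> None:
        self.blocks[slot] = payload

    def versions(self, slot: int) -> int:
        return 1 if slot in self.blocks else 0

class HashKeyedStore:
    """Post-fix model: blocks keyed by hash, so competing versions coexist."""

    def __init__(self):
        self.blocks = {}   # hash -> (slot, payload)
        self.by_slot = {}  # slot -> set of block hashes

    def insert(self, slot: int, payload: bytes) -> str:
        h = block_hash(slot, payload)
        self.blocks[h] = (slot, payload)
        self.by_slot.setdefault(slot, set()).add(h)
        return h

    def versions(self, slot: int) -> int:
        return len(self.by_slot.get(slot, set()))

old, new = SlotKeyedStore(), HashKeyedStore()
for payload in (b"block A", b"block B"):  # two different blocks for slot 42
    old.insert(42, payload)
    new.insert(42, payload)

print(old.versions(42))  # 1: block A was silently replaced by block B
print(new.versions(42))  # 2: both versions are visible and can be repaired
```

Once both versions are visible, the node can recognize that it and its peers may hold different blocks for the same slot, which is the precondition for repairing and reconciling forks.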

Incident #2: Grape Protocol IDO Congestion Outage

In a big race, thousands of fans crowd the track, all trying to snap a selfie with the Ferrari. It’s so crowded that the car can’t move. Even worse, someone jams the brakes by messing with a critical part under the hood. The engine doesn’t fail, but the traffic is so bad that the car must stop, cool down, and wait until the track is clear.

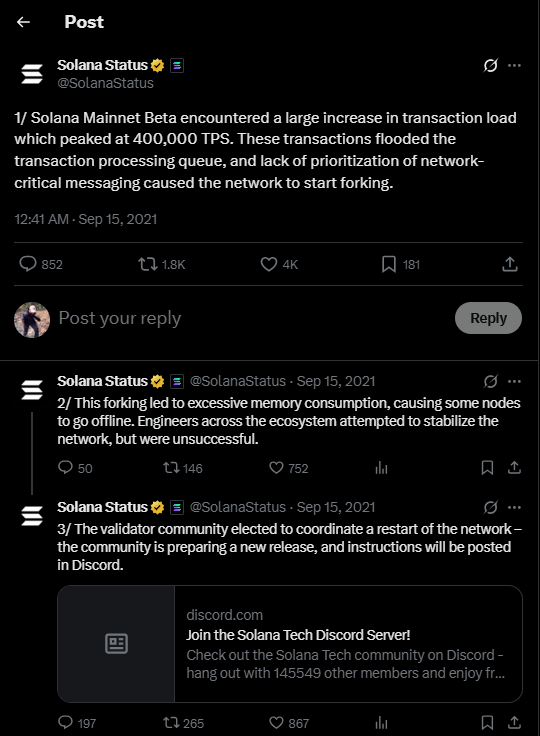

On September 14, 2021, Solana experienced its longest downtime to date: a 17-hour halt triggered by bot activity during Grape Protocol’s initial DEX offering (IDO) on Raydium AcceleRaytor.

Within minutes of the IDO going live, a flood of automated bot transactions swarmed the network, generating over 300,000 TPS at the validator level. Network interfaces were saturated, exceeding 1 Gbps of bandwidth and 120,000 packets per second, causing packet loss before transactions even reached validators.

A particularly disruptive bot exploited Solana’s transaction model by issuing spam that write-locked 18 key accounts, including the SPL Token program and Serum DEX. This disabled Solana’s core advantage: parallel transaction execution. Instead, transactions queued behind these locks, effectively serializing the entire system.

This event exposed multiple bottlenecks, prompting critical upgrades:

Disabled write locks on programs, allowing transactions to proceed in parallel

Rate-limiting on transaction forwarding to prevent overload propagation

Configurable RPC retry behavior, helping dApps reduce traffic under congestion

Prioritization of vote transactions, ensuring consensus can progress even under load
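Why write locks on a shared account serialize execution, and why demoting programs to read locks helps, can be sketched with a toy batch scheduler. This is a simplified model, assuming each transaction declares its read and write account sets up front (as Solana transactions do) and that transactions in the same batch may run in parallel only if none writes an account another one touches. The scheduler and account names are hypothetical, not Solana's banking stage.

```python
from dataclasses import dataclass, field

@dataclass
class Tx:
    name: str
    writes: set = field(default_factory=set)
    reads: set = field(default_factory=set)

def conflicts(a: Tx, b: Tx) -> bool:
    # Two transactions conflict if either writes an account the other touches.
    return bool(a.writes & (b.writes | b.reads)) or bool(b.writes & a.reads)

def schedule(txs: list) -> list:
    """Split transactions, in arrival order, into batches that may run in parallel.

    A transaction joins the current batch only if it conflicts with nothing in it;
    otherwise it starts a new batch, i.e. it is serialized behind the others.
    """
    batches = []
    for tx in txs:
        if batches and not any(conflicts(tx, other) for other in batches[-1]):
            batches[-1].append(tx)
        else:
            batches.append([tx])
    return batches

independent = [Tx(f"swap{i}", writes={f"user{i}"}) for i in range(4)]
spam = [Tx(f"spam{i}", writes={"token_program", f"user{i}"}) for i in range(4)]
shared_read = [Tx(f"tx{i}", writes={f"user{i}"}, reads={"token_program"}) for i in range(4)]

print(len(schedule(independent)))  # 1: all four can run in parallel
print(len(schedule(spam)))         # 4: one shared write lock serializes everything
print(len(schedule(shared_read)))  # 1: read locks on the program can be shared
```

The `spam` case mirrors the bot that write-locked key program accounts; the `shared_read` case corresponds to disabling write locks on programs.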

The reboot process also uncovered an unrelated bug: an integer overflow during stake calculations. Due to an inflation miscalculation, the system generated stake values that exceeded the range of a 64-bit unsigned integer, destabilizing validator state tracking. This bug was identified and patched before the final network restart.
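Python integers never overflow, so the sketch below emulates 64-bit unsigned arithmetic to contrast a silently wrapping add with a checked add that refuses to produce a bogus stake value. It mirrors the spirit of Rust's `u64::wrapping_add` and `u64::checked_add`; the helper names and numbers are hypothetical, and this is not Solana's actual stake code.

```python
from typing import Optional

U64_MAX = 2**64 - 1

def wrapping_add_u64(a: int, b: int) -> int:
    # What unchecked u64 arithmetic does on overflow: wrap modulo 2**64.
    return (a + b) & U64_MAX

def checked_add_u64(a: int, b: int) -> Optional[int]:
    # Return None instead of a wrapped (and silently wrong) result.
    total = a + b
    return total if total <= U64_MAX else None

stake = U64_MAX - 10  # an already huge, miscalculated stake value
reward = 100          # hypothetical inflation reward

print(wrapping_add_u64(stake, reward))  # 89: the stake silently "collapses"
print(checked_add_u64(stake, reward))   # None: the error is surfaced instead
```

A checked operation turns a corrupted value into an explicit error that can be caught before it spreads into validator state.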

This incident revealed how economic incentives (IDO participation) and architectural limitations (transaction locks, spam-forwarding) could combine to trigger systemic failure. It also made clear that transaction volume alone is not enough to assess network health. The type of transaction and how it interacts with shared state is equally important.

Solana's response demonstrated a growing maturity: not only were protocol-level changes implemented, but toolkits for developers and validators were improved to handle future spikes with more grace.

Incident #3: High Congestion Event

The Ferrari did not stop, but it was stuck in traffic for days. Fans kept sending duplicate requests for selfies, clogging the road. The car’s engine and systems still did not fail, but it crawled painfully, overheating from the repeated load. Engineers tweaked the filters to ignore spammy fans and let real traffic clear faster.

In January 2022, Solana’s mainnet experienced severe congestion due to an influx of duplicate transactions, causing performance degradation and reduced throughput. It suffered multiple partial outages, including a significant 18–48-hour disruption driven by high-compute transactions and excessive duplicate transactions.

Transaction success rates plummeted by 70%, and block processing delays caused forks that further fragmented validator consensus. The network never halted entirely, but its performance was badly impaired.

At the core of the issue was the inability of the runtime to efficiently filter and discard spammy duplicate transactions. The transaction verifier and execution layer were overwhelmed, highlighting inefficiencies in Solana’s compute and memory architecture, especially under complex transaction loads.

The situation worsened between January 21-23 when the public RPC endpoint was taken offline due to spammed batched RPC calls that exceeded system handling capacity.

Solana fixed cache exhaustion caused by repeated program executions, improved Sysvar caching, and optimized SigVerify discard and deduplication.

The lesson: throughput can collapse not from a lack of resources but from inefficient processing and validation mechanisms. RPC endpoints also need abuse resistance, especially during periods of high user activity.
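Discarding duplicates before expensive signature verification is the kind of early filtering this fix targets. A minimal sketch, assuming packets are identified by their first signature (on Solana, a transaction's first signature serves as its ID) and using a bounded, exact FIFO set; the production deduplicator is more elaborate, and the class and function names here are hypothetical.

```python
from collections import OrderedDict

class SignatureDeduper:
    """Drop packets whose signature was seen recently, with bounded memory."""

    def __init__(self, capacity: int = 100_000):
        self.capacity = capacity
        self.seen = OrderedDict()  # signature -> None, oldest first

    def is_new(self, signature: bytes) -> bool:
        if signature in self.seen:
            return False  # duplicate: skip the costly verification step
        self.seen[signature] = None
        if len(self.seen) > self.capacity:
            self.seen.popitem(last=False)  # evict the oldest entry
        return True

def filter_batch(deduper: SignatureDeduper, packets: list) -> list:
    # Only packets that survive dedup move on to signature verification.
    return [p for p in packets if deduper.is_new(p["signature"])]

deduper = SignatureDeduper(capacity=3)
batch = [{"signature": s} for s in (b"sig1", b"sig1", b"sig2", b"sig1")]
print(len(filter_batch(deduper, batch)))  # 2
```

The bounded capacity matters as much as the dedup itself: an unbounded set would simply move the exhaustion problem from the verifier into memory.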

Users experienced severe delays, failed transactions and inability to interact with dApps during congestion peaks.

Incident #4: Wormhole Exploit

A thief cloned the Ferrari’s garage key and drove off with a brand-new car, without paying. The garage owner replaced the stolen car (out of his pocket) and installed biometric locks to prevent future break-ins.

On February 2nd, 2022, a critical vulnerability in the Wormhole bridge allowed an attacker to forge messages and mint 120,000 wrapped ETH (~$326M) on Solana without depositing ETH on Ethereum.

The exploit stemmed from bypassed signature verification in the Wormhole smart contract. The attacker supplied a spoofed sysvar account that the contract failed to validate, tricking it into treating the attacker’s message as legitimately guardian-signed.
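The class of bug is easy to state in code: a program trusted an account as if it were Solana's Instructions sysvar without checking its address. Post-mortems commonly attribute this to a deprecated helper (`load_instruction_at`) that skipped the address check. The sketch below is a hypothetical Python model, not Wormhole's Rust code: it assumes each account is a dict with a `key` and `data`, uses the well-known Instructions sysvar address, and reduces guardian verification to a single hypothetical rule.

```python
INSTRUCTIONS_SYSVAR_ID = "Sysvar1nstructions1111111111111111111111111"

class AccountCheckError(Exception):
    pass

def load_instructions_checked(account: dict) -> list:
    """Read instruction data only from the genuine Instructions sysvar."""
    if account["key"] != INSTRUCTIONS_SYSVAR_ID:
        raise AccountCheckError(f"unexpected sysvar account: {account['key']}")
    return account["data"]

def signatures_were_verified(account: dict) -> bool:
    # Hypothetical rule: trust the message only if a secp256k1
    # verification instruction ran earlier in the same transaction.
    instructions = load_instructions_checked(account)
    return any(ix["program"] == "secp256k1" for ix in instructions)

genuine = {"key": INSTRUCTIONS_SYSVAR_ID, "data": [{"program": "secp256k1"}]}
spoofed = {"key": "AttackerControlledAccount", "data": [{"program": "secp256k1"}]}

print(signatures_were_verified(genuine))  # True
try:
    signatures_were_verified(spoofed)
except AccountCheckError as err:
    print("rejected:", err)  # the forged "proof" is never read
```

Both accounts carry identical data; only the address check distinguishes the real sysvar from an attacker-controlled look-alike.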

The incident caused:

$326M stolen in fake wrapped ETH

Declining confidence in cross-chain bridges

Holders of Wormhole’s wETH were left with unbacked tokens until the reserves were replenished

The Wormhole team paused the bridge. Jump Crypto, Wormhole’s backer, stepped in and replenished the 120,000 ETH from its treasury.

Engineers fixed the smart contract to verify guardian signatures correctly. The incident also drove a long-term focus on zero-knowledge proofs for bridge security.

Users were fully reimbursed by Jump Crypto.

Incident #5: Candy Machine Outage

Thousands of scalpers caught wind of a limited-edition autograph session (NFT mint via Metaplex’s Candy Machine) and stormed the track. Their goal? Be the first to shove a paper under the Ferrari's pen and flip it for profit.

They flooded the track, tossing so many papers at it that the car’s memory overheated, causing it to stall. In response, engineers installed smart filters, introduced penalties for disruptive behavior, and rewired the engine to recognize and prioritize genuine fans.

The outage lasted from April 30th to May 1st, 2022: Solana faced an 8-hour network halt after an extreme spike in transaction volume driven by bots trying to mint NFTs through Metaplex’s Candy Machine. Nodes received up to 6 million requests per second, pushing over 100 Gbps of data per validator, far beyond network capacity.

The root cause lay in Candy Machine’s first-come-first-served mint mechanism, which incentivized spam. Transactions competing for the same accounts created lock contention, leading to abandoned forks and overwhelming validators.

It caused:

Full network halt for 8 hours

Validators required manual coordination to restart the network

Significant delays for NFT mints, dApp operations, and user transactions

The network restarted after validators agreed on a snapshot. To deter future spam, Metaplex introduced a penalty of 0.01 SOL (about $0.89 at the time) on invalid transactions.

Solana introduced structural upgrades like:

The QUIC protocol replaced raw UDP for transaction ingestion, allowing fine-grained traffic control

Stake-Weighted Quality of Service (SWQoS) prioritized transactions from staked validators

Priority Fees let users pay more to prioritize urgent transactions
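Priority fees have a simple arithmetic core: a transaction sets a compute-unit price in micro-lamports per compute unit, and the prioritization fee is that price multiplied by the requested compute-unit limit. The sketch below computes the fee in lamports (rounding up, an assumption of this sketch) and orders pending transactions by price. The scheduling policy and transaction names are hypothetical simplifications, not the validator's actual scheduler.

```python
from dataclasses import dataclass

MICRO_LAMPORTS_PER_LAMPORT = 1_000_000

@dataclass
class PendingTx:
    name: str
    cu_limit: int  # requested compute units
    cu_price: int  # micro-lamports per compute unit

    def priority_fee_lamports(self) -> int:
        # price x limit, converted from micro-lamports (rounded up here).
        micro = self.cu_price * self.cu_limit
        return -(-micro // MICRO_LAMPORTS_PER_LAMPORT)

def order_by_priority(txs: list) -> list:
    # Higher price per compute unit goes first; ties keep arrival order.
    return sorted(txs, key=lambda t: t.cu_price, reverse=True)

queue = [
    PendingTx("bot_mint", cu_limit=200_000, cu_price=0),
    PendingTx("user_swap", cu_limit=200_000, cu_price=5_000),
    PendingTx("liquidation", cu_limit=400_000, cu_price=50_000),
]
print([t.name for t in order_by_priority(queue)])
# ['liquidation', 'user_swap', 'bot_mint']
print(queue[1].priority_fee_lamports())  # 1000
```

Ordering by price per compute unit, rather than by total fee, keeps a large transaction from buying priority simply by requesting more compute.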

The network learned that first-come-first-served mechanics incentivize spam, turning a minting design choice into a network-level vulnerability.

No fund losses, but users missed NFT mints or were forced to pay high fees to compete.

Incident #6: Durable Nonce Bug

A bug let the Ferrari mistake an old pit stop for a new one, confusing the mechanics. Some thought the lap was finished; others didn’t. The car couldn’t race until everyone agreed on where it actually was. Engineers rewrote the rules so pit stops can no longer be mistaken for one another.

On June 1st, 2022, a bug in Solana’s durable nonce transaction handling allowed the same transaction to be processed twice, once as a regular and once as a durable nonce transaction. This non-deterministic behavior split the validator set: some rejected the duplicate, and others did not.

This prevented any fork from reaching the two-thirds consensus threshold, halting the network.

Durable nonces on Solana use a dedicated on-chain account to store a nonce (number used once), which a transaction uses in place of a recent blockhash. The transaction’s first instruction, “advance nonce”, replaces the stored value with one derived from a recent blockhash when the transaction executes, so the same transaction cannot be replayed. This extends a transaction’s validity beyond the typical blockhash lifespan, enabling delayed or automated execution and making transactions more resilient to network congestion.

The incident caused 4.5 hours of network downtime and a temporary loss of the ability to use durable nonce transactions (relied on by cold wallets and exchanges).

Solana developers separated the nonce and blockhash domains so that a stored nonce value can never be mistaken for a recent blockhash, eliminating the ambiguity.

The lesson: subtle bugs in nonce handling can lead to consensus splits, and validators must always process transactions identically.
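The domain-separation fix can be illustrated by deriving the stored nonce from a blockhash with a fixed prefix, so a nonce value can never equal a blockhash that a regular transaction might also reference. A minimal sketch under stated assumptions: SHA-256 with a `DURABLE_NONCE` prefix stands in for the real derivation, the class and function names are hypothetical, and the validity checks are heavily simplified.

```python
import hashlib

NONCE_PREFIX = b"DURABLE_NONCE"  # domain-separation tag (assumed for this sketch)

def durable_nonce_from(blockhash: bytes) -> bytes:
    # Hashing with a prefix moves nonce values into their own domain.
    return hashlib.sha256(NONCE_PREFIX + blockhash).digest()

class NonceAccount:
    def __init__(self, blockhash: bytes):
        self.nonce = durable_nonce_from(blockhash)

    def advance(self, latest_blockhash: bytes) -> None:
        # Models the "advance nonce" instruction: the stored value is replaced.
        self.nonce = durable_nonce_from(latest_blockhash)

def classify(recent_hash: bytes, recent_blockhashes: set, account: NonceAccount) -> str:
    """Decide how a transaction's recent-hash field should be interpreted."""
    is_blockhash = recent_hash in recent_blockhashes
    is_nonce = recent_hash == account.nonce
    if is_blockhash and is_nonce:
        # The pre-fix ambiguity: one transaction valid in two different ways.
        raise ValueError("ambiguous: value is both a blockhash and a nonce")
    if is_blockhash:
        return "regular"
    if is_nonce:
        return "durable"
    return "invalid"

bh1, bh2 = b"\x01" * 32, b"\x02" * 32
recent = {bh1, bh2}
account = NonceAccount(bh1)

print(classify(bh1, recent, account))            # regular
print(classify(account.nonce, recent, account))  # durable
stale = account.nonce
account.advance(bh2)
print(classify(stale, recent, account))          # invalid: replay is blocked
```

If the account stored `bh1` itself as its nonce (no prefix), `classify(bh1, ...)` would hit the ambiguous branch, the same kind of double interpretation that split validators in this incident.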

Incident #7: Slope Wallet Incident

One valet service accidentally made a copy of your Ferrari’s key and uploaded it to the cloud. A thief got hold of it, walked into the parking garage, and drove off with your car. It wasn’t the car maker’s fault, but you still lost the car.

On August 2nd, 2022, the Slope mobile wallet app inadvertently transmitted users’ private key data to a remote telemetry server. This sensitive data was then intercepted or accessed by a malicious actor, resulting in compromised wallets.

The incident drained 9,231 wallets of about $4.1M, shaking trust in the Solana ecosystem. Users of popular wallet apps like Phantom and Solflare were also affected if they had reused seed phrases from Slope.

Solana Labs responded with an investigation via audit and security firms. Slope Finance issued public advisories and started their own audit. Users were urged to abandon old wallets and transfer funds to fresh ones.

This incident showed that wallets are critical security layers. Even widely used wallets can introduce systemic risk if poorly implemented, and reusing seed phrases across wallets is highly dangerous.

The incident primarily hit retail users, and the $4.1M stolen was never recovered.
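The Slope failure came down to secret material flowing into a telemetry pipeline. One defensive pattern is to scrub events before they ever leave the device. This is a minimal, hypothetical sketch: the field names (`mnemonic`, `private_key`, and so on) and the event shape are assumptions, and key-name redaction cannot catch secrets embedded in free-text messages, so the safer design is to keep secrets out of logging paths entirely.

```python
SENSITIVE_KEYS = {"mnemonic", "seed", "seed_phrase", "private_key", "secret_key"}
REDACTED = "[REDACTED]"

def scrub(event):
    """Recursively redact sensitive fields before an event is sent anywhere."""
    if isinstance(event, dict):
        return {
            k: REDACTED if str(k).lower() in SENSITIVE_KEYS else scrub(v)
            for k, v in event.items()
        }
    if isinstance(event, list):
        return [scrub(item) for item in event]
    return event

event = {
    "message": "wallet imported",
    "extra": {"mnemonic": "abandon abandon ...", "address": "7xKX..."},
}
print(scrub(event))
# {'message': 'wallet imported', 'extra': {'mnemonic': '[REDACTED]', 'address': '7xKX...'}}
```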

Incident #8: Duplicate Block Bug

The Ferrari’s backup driver jumped in the race at the same time as the main driver, both raced ahead on different tracks but wore the same number. The rest of the racers became confused about who to follow in the race. Race control paused everything until the real lead car was found.

The root issue was that a validator’s primary and backup nodes were active simultaneously, producing duplicate blocks under the same identity. Due to a bug in fork choice logic, other validators couldn’t switch to the correct fork when conflicts arose, freezing consensus.

It caused 8.5 hours of network downtime, a full halt of network activity. Validators and the community coordinated a restart after the bug was identified and a patch was issued.

In response, validator identity handling for fallback nodes was improved, and duplicate slot ancestors are now handled properly.
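Catching this class of fault starts with noticing that one leader identity signed two different blocks for the same slot, as happens when a primary and a backup node run with the same key. The sketch below is a hypothetical detector that assumes each received block carries (leader identity, slot, block hash). On Solana, the real evidence is a pair of conflicting signed shreds, and what fork choice does with that evidence is far more involved.

```python
class DuplicateBlockDetector:
    def __init__(self):
        self.first_seen = {}  # (leader, slot) -> first block hash observed

    def observe(self, leader: str, slot: int, block_hash: str):
        """Return a proof tuple if this leader already produced a different block."""
        previous = self.first_seen.setdefault((leader, slot), block_hash)
        if previous != block_hash:
            return (leader, slot, previous, block_hash)
        return None

detector = DuplicateBlockDetector()
# Primary and backup nodes both sign slot 100 with the same identity.
print(detector.observe("validatorX", 100, "hashA"))  # None
print(detector.observe("validatorX", 100, "hashB"))
# ('validatorX', 100, 'hashA', 'hashB')
```

Seeing the same block twice is harmless and returns `None`; only two different hashes for one (leader, slot) pair produce a proof.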

Incident #9: Mango Markets Exploit - A DeFi Layer Exploit

A driver legally rented a Ferrari, rigged the GPS to think he owned ten more, then used that fake value to rent the entire fleet and sell them off. It wasn’t a break-in; it was a loophole in the rental system. The dealership was left with no cars and a gaping hole in its books.

The incident happened on October 11, 2022. The root cause was price manipulation of Mango’s MNGO token: pumping it on low-liquidity markets inflated the attacker’s collateral value, allowing them to borrow far more than they had deposited.

The attacker manipulated MNGO’s thin liquidity on external exchanges, spiking its oracle price.

This drained about $117M. The platform became insolvent and had to pause all operations. In response, the DAO negotiated directly with the attacker, and $67M was returned after a community vote accepted the terms. The U.S. DOJ later arrested Avraham Eisenberg on charges of market manipulation.

Although Solana’s core was not compromised, the broader perception of Solana’s safety took a hit.

The exploit stemmed from Mango’s smart contract design and economic assumptions. The takeaway is to focus not only on code audits but also on economic resilience and oracle robustness.

About $50M was never recovered, and the returned funds were redistributed to users according to the vote.
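The Mango mechanics reduce to plain arithmetic: borrowing power scales with the oracle price of the collateral, so pumping a thin market pumps borrowing power. The sketch below assumes a hypothetical lending rule (borrow limit = collateral units × oracle price × loan-to-value ratio) and one simple mitigation that caps the oracle price at a multiple of a slower reference price. None of these numbers or functions are Mango’s actual parameters or code.

```python
def borrow_limit(collateral_units: float, oracle_price: float, ltv: float = 0.8) -> float:
    # Borrowing power grows linearly with whatever the oracle reports.
    return collateral_units * oracle_price * ltv

def capped_price(spot: float, reference: float, max_ratio: float = 1.2) -> float:
    # Hypothetical guard: ignore spot moves more than 20% above a slower reference.
    return min(spot, reference * max_ratio)

units = 1_000_000          # hypothetical collateral tokens held
fair, pumped = 0.03, 0.30  # a 10x pump on a thin market

print(borrow_limit(units, fair))                        # roughly 24,000
print(borrow_limit(units, pumped))                      # roughly 240,000
print(borrow_limit(units, capped_price(pumped, fair)))  # roughly 28,800
```

The cap does not prevent manipulation of the spot market; it bounds how much a short-lived price spike can translate into borrowing power, which is the oracle-robustness point above.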

Incident #10: Large Block Overwhelms Turbine

One driver hacked their Ferrari’s engine to output 1000x more fuel packets than normal. The racetrack clogged, the pit crew panicked, and everyone was forced to pull over while the mess was cleared. Now, fuel packets are capped and filtered more strictly.

This incident occurred in February 2023. A validator’s custom shred-forwarding service malfunctioned and transmitted an exceptionally large block (~150,000 shreds), far larger than normal. The block wasn’t filtered out upstream, and because the deduplication logic failed, it kept repropagating through the network.

It caused ~19 hours of downtime, as Turbine, Solana’s data propagation layer, was overwhelmed.

The response was a manual restart coordinated with the validator community.

Engineers enhanced the deduplication and filtering logic in the shred-forwarding layer, added limits on block size, and improved validation to prevent looping propagation in Turbine.

Incident #11: Infinite Recompile Loop

The car’s new smart engine would sometimes forget that a component had already been installed and keep trying to re-install it mid-race. This created a feedback loop that bricked every car on the track. Engineers disabled the old parts and fixed the installation system.

In February 2024, a bug in Agave v1.16’s program caching system caused validators to endlessly recompile legacy loader programs. When these programs were evicted from cache but re-triggered with old slot metadata, they would be treated as unloaded repeatedly. This created a cluster-wide infinite loop.

It caused ~5 hours of downtime, with 95% of validator stake stalled while replaying the same faulty block. Agave v1.17 made the situation worse by removing cooperative loading safeguards.

The key takeaways: legacy support must be handled cautiously in performance-critical layers, and determinism and cache awareness are critical to consensus safety. The incident caused no loss of funds but hit validators hardest.

Incident #12: Coordinated ELF Vulnerability Patch

Someone discovered a way to build a fake engine part that would crash any Ferrari it was installed in. Before the information leaked, the engineers secretly coordinated a patch across garages worldwide. The fix was deployed quietly and successfully, before any sabotage could take place.

In August 2024, an ELF (Executable and Linkable Format) bug allowed Solana programs to define misaligned .text sections, leading to validator crashes via invalid memory access. If exploited, it could have halted the network by crashing multiple leaders in a row.

It did not cause any downtime, but the risk of catastrophic failure was imminent. The fix had to be deployed through stealth coordination before public disclosure.

The Solana Foundation and core engineers privately alerted validator operators, sharing a hashed message so operators could verify that the coordination was authentic.

After the incident, ELF sanitization was improved to validate section alignment.

The lesson is that decentralized coordination remains a human logistics challenge, and the line between coordination and centralization must be navigated transparently.
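A hash commitment lets someone publish a short fingerprint now and prove later that a message is the one they fixed in advance. The sketch below shows that pattern with SHA-256. It is a generic commit-and-reveal illustration; the exact scheme used in the August 2024 coordination is not described here, so treat this flow and the example message as assumptions.

```python
import hashlib
import hmac

def commit(message: bytes) -> str:
    # Publish only the digest; the message itself stays private for now.
    return hashlib.sha256(message).hexdigest()

def verify_reveal(message: bytes, published_digest: str) -> bool:
    # Constant-time comparison of the recomputed digest with the published one.
    return hmac.compare_digest(commit(message), published_digest)

notice = b"Please upgrade to the patched release before slot N."  # hypothetical
digest = commit(notice)

print(verify_reveal(notice, digest))                        # True
print(verify_reveal(b"Install this other binary", digest))  # False
```

For short or guessable messages, a random salt would normally be committed along with the message so the digest cannot be brute-forced before the reveal.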
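The alignment fix amounts to rejecting a program whose `.text` section is misaligned before it is ever loaded. A minimal sketch, assuming sections have already been parsed into name/offset/size records and assuming an 8-byte alignment requirement (sBPF instructions are 8 bytes wide). The record type, function, and offsets are hypothetical, and the real loader performs many more checks.

```python
from dataclasses import dataclass

INSTRUCTION_SIZE = 8  # sBPF instructions are 8 bytes wide

@dataclass
class Section:
    name: str
    offset: int  # file offset in bytes
    size: int    # length in bytes

class ElfError(Exception):
    pass

def sanitize_text_section(sections: list, file_len: int) -> Section:
    """Reject a program whose .text section is missing, misaligned, or out of bounds."""
    text = next((s for s in sections if s.name == ".text"), None)
    if text is None:
        raise ElfError("missing .text section")
    if text.offset % INSTRUCTION_SIZE != 0 or text.size % INSTRUCTION_SIZE != 0:
        raise ElfError("misaligned .text section")
    if text.offset + text.size > file_len:
        raise ElfError(".text section out of bounds")
    return text

aligned = [Section(".text", offset=0x120, size=64)]
misaligned = [Section(".text", offset=0x123, size=64)]

print(sanitize_text_section(aligned, file_len=1024).name)  # .text
try:
    sanitize_text_section(misaligned, file_len=1024)
except ElfError as err:
    print("rejected:", err)  # rejected: misaligned .text section
```

Doing this check at load time means a malicious program is refused by every validator identically, instead of crashing whichever leader happens to execute it.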

Incident #13: npm Malware in Solana Web3.js

Imagine a wrench tool set used in Ferrari garages, which was tampered with. Every time it touched an engine, it copied the keys to the ignition. For five hours, every garage using the updated wrench set unknowingly handed out free keys to thieves.

On December 3rd, 2024, a publish-access account for @solana/web3.js was compromised, leading to the upload of malicious package versions (v1.95.6 and v1.95.7) that exfiltrated private keys from apps that handled them directly (e.g., bots, backends, custodial services).

It did not impact non-custodial wallets. The malicious versions were identified and unpublished within 5 hours.

This incident raised awareness around client SDK security, and the community urged developers to pin dependencies to exact versions.

The lesson is that supply chain attacks can be as damaging as protocol exploits, and that projects should minimize direct handling of private keys.
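Pinning exact versions and refusing known-bad releases can be automated in CI. The sketch below is a hypothetical check, assuming dependencies have already been read from a `package.json` into a dict. The two compromised versions are the ones named above; the pinning rule (exact `x.y.z`, no `^` or `~` range) is one reasonable policy rather than an official one, and `example-lib` is a placeholder.

```python
import re

COMPROMISED = {"@solana/web3.js": {"1.95.6", "1.95.7"}}
EXACT = re.compile(r"^\d+\.\d+\.\d+$")  # e.g. "1.95.5", but not "^1.95.5"

def audit(dependencies: dict) -> list:
    """Return human-readable problems found in a dependency map."""
    problems = []
    for name, spec in dependencies.items():
        if not EXACT.match(spec):
            problems.append(f"{name}: range '{spec}' is not pinned")
        elif spec in COMPROMISED.get(name, set()):
            problems.append(f"{name}: {spec} is a known-compromised release")
    return problems

print(audit({"@solana/web3.js": "^1.95.5", "example-lib": "2.1.0"}))
# ["@solana/web3.js: range '^1.95.5' is not pinned"]
print(audit({"@solana/web3.js": "1.95.7"}))
# ['@solana/web3.js: 1.95.7 is a known-compromised release']
```

A caret range such as `^1.95.5` accepts any later 1.x release, so a fresh install during the attack window could have resolved to one of the malicious versions; an exact pin combined with a lockfile closes that gap.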

Thoughts

Rather than a simple uptime record, Solana’s story is one of increasing resilience through accumulated scars. Each outage was a high-stakes, real-world stress test, revealing weaknesses not just in code but in coordination, monitoring, and community expectations.

Look closely and the failure modes on Solana are strikingly diverse. This blockchain is tackling a wider surface area than most chains.

Across nearly every major incident, one pattern recurred: coordinated validator response. The validator ecosystem on Solana has consistently demonstrated that social coordination is part of its technical stack.

Folks in this space often cite decentralization as the measure of a protocol’s robustness, but Solana’s experience suggests another metric: how quickly can you mobilize resilience when things go wrong?

Ultimately, Solana’s greatest strength may not be its throughput or design choices, but its persistence. Each incident tested the network’s capabilities. Solana remains a unique blockchain, battle-tested by more security incidents than most and resolving each of them. It has a strong, supportive, and growing community to keep its supercar engine running!! 😉😉

Feel free to contact me at abhijith_psr on X with any suggestions or opinions. If you found this even slightly insightful, please share it.